The hip word in development research these days is scale. For many, the goal of experimenting has become to learn quickly what works and then scale up the things that do. Scaling up is so popular a topic that some have started using 'upscale' as a verb, which might seem a bit awkward to those who live in upscale neighbourhoods or own upscale boutiques.

We have very little evidence about whether estimated effect sizes from pilots can be observed at scale. When we have impact evaluation evidence from the pilot, we rarely bother to conduct another rigorous evaluation at scale. Evidence Action, a well-known proponent of randomised controlled trial-informed programming, frankly states, “…we do not measure impact at scale (and are unapologetic about it)”.

The reasons are easy to understand. We are eager to use what we learn from our experiments or pilots to make a positive difference in developing countries as quickly as possible. And impact evaluations are expensive, so why spend the resources to measure a result that we have already demonstrated? This is especially true when an impact evaluation at scale is so much more expensive and complicated to conduct.

We do not always see the same effects at scale

A few recent studies, however, suggest that we cannot necessarily expect the same results at scale that we measured for the pilot. Bold et al. (2013) use an impact evaluation to test at scale an education intervention in Kenya that had been shown to work well in a pilot trial. They find, ‘Strong effects of short-term contracts produced in controlled experimental settings are lost in weak public institutions.’ Berge et al. (2012) use an impact evaluation to test what they call a local implementation of a business training intervention in Tanzania and conclude, ‘the estimated effect of research-led interventions should be interpreted as an upper bound of what can be achieved when scaling up such interventions locally’. Vivalt (2015) analyses a large database of impact evaluation findings to address the question of how much we can generalise from them and reports, “I find that if policymakers were to use the simplest, naive predictor of a program’s effects, they would typically be off by 97%.”

There are a number of reasons to expect that the effects measured in our pilot studies will not directly predict the impacts at scale. Most observers focus on the concept of external validity. Aronson et al. (2007) define external validity as ‘the extent to which the results of a study can be generalised to other situations and to other people’. Based on that definition, external validity should not be the main problem when generalising from a pilot to a scaled implementation of the same intervention in the same place, since the people and the setting are largely the same.

The problem is ecological validity

The concept I find more useful for the pilot-to-scale challenge is ecological validity. Brewer (2000) defines ecological validity as ‘the extent to which the methods, materials and setting of a study approximate the real-world that is being examined.’ If we want to go from pilot to scale and expect the same net impacts in the same setting, what we need to establish is the ecological validity of the pilot's impact evaluation. There are, however, several potential threats to ecological validity. Here are some examples:

- Novelty effects. Some of the interventions that we pilot for development are highly innovative and creative. The strong results we see in the pilot phase may be partly due to the novelty of the intervention. The novelty may wear off at scale, particularly if everyone is now a participant or hears the same message. 3ie funded two impact evaluations that piloted cell phone lotteries as a way to increase demand for voluntary medical male circumcision. As it turned out, the pilots did not find an effect, but if they had, I fear they would have measured a lot of novelty effect.

- Partial versus general equilibrium. A basic premise of market economics is that if everyone plays on the same level playing field (e.g. has the same information and faces the same prices), no one will be able to earn more profit than anyone else. That outcome is the general equilibrium outcome. By definition, pilot programmes designed to improve economic outcomes measure only partial equilibrium outcomes. That is, only a small sample of the ultimately targeted group receives the economic advantage of the intervention. If those in the treatment group operate in the same markets as those not in the treatment group, the pilot programme can introduce a competitive advantage; it can unlevel the playing field.

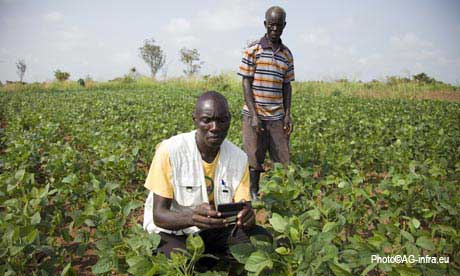

What is measured in the pilot is therefore not predictive of what will happen when the intervention is scaled up and the playing field is levelled. I saw an example of this in a pilot intervention that delivered price information to a treatment group of farmers through cell phones, while ensuring that the control farmers did not have access to the same price information. The profits measured for the treatment farmers, who had superior information, cannot be expected once all the farmers in the market get the same information.

- Implementation agents. This threat to ecological validity is one that Bold et al. and Berge et al. highlight, although they lump it under the concept of external validity. Researchers who want to pilot programmes for the purpose of experimentation often find NGOs or even local students and researchers to implement their programme. To ensure the fidelity of the implementation, the researchers carefully train the local implementers and sometimes monitor them closely (although sometimes not, which is a topic for another blog post). At scale, programmes need to be implemented by the government or other local implementers who are not trained and monitored by researchers. But who implements a programme, and how they are managed and monitored, makes a big difference to the outcomes, as shown by Bold et al. and Berge et al.

- Implementation scale. What we hope when a programme goes to scale is that there will be economies of scale, i.e. total costs increase less than proportionately when the programme is spread over a larger group of beneficiaries. These economies of scale should mean that the cost-effectiveness at scale is even better than what was measured for the pilot. Unfortunately, there can also be diseconomies of scale (i.e. costs increase more than proportionately), particularly for complicated interventions. In addition, at scale the programme may encounter input or labour supply constraints that did not affect the pilot. Supply constraints will be important to consider when scaling up successful pilot programmes for HIV self-tests, for example.
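To make the economies-of-scale argument concrete, here is a minimal sketch in Python. It assumes a hypothetical cost function in which total cost grows as n raised to a scale elasticity (below 1 for economies of scale, above 1 for diseconomies) and, optimistically, that the net impact per beneficiary stays the same at scale. The function names, parameters and numbers are illustrative only and do not come from any actual programme.

```python
def total_cost(n: int, base_cost: float, elasticity: float) -> float:
    """Hypothetical total cost of serving n beneficiaries.

    elasticity < 1: economies of scale (costs grow less than proportionately)
    elasticity > 1: diseconomies of scale (costs grow more than proportionately)
    """
    return base_cost * n ** elasticity


def cost_per_unit_impact(n: int, base_cost: float, elasticity: float,
                         impact_per_person: float) -> float:
    """Cost-effectiveness ratio: total cost divided by total net impact.

    Assumes (hypothetically) that the net impact per person is unchanged at scale.
    """
    return total_cost(n, base_cost, elasticity) / (n * impact_per_person)


if __name__ == "__main__":
    pilot_n, scale_n = 500, 50_000  # illustrative sizes only
    for elasticity in (0.9, 1.0, 1.1):
        pilot = cost_per_unit_impact(pilot_n, 10.0, elasticity, 0.5)
        scaled = cost_per_unit_impact(scale_n, 10.0, elasticity, 0.5)
        print(f"elasticity={elasticity}: pilot={pilot:.2f}, at scale={scaled:.2f}")
```

With an elasticity below 1 the cost per unit of impact falls as the programme grows; above 1 it rises. If novelty effects, levelled playing fields or weaker implementers also shrink `impact_per_person` at scale, the ratio worsens further, which is the combined risk the bullets above describe.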

Unfortunately, if we cannot achieve the same results at scale, the findings from the pilot are of little use. Sure, we can often collect performance evaluation data to observe outcomes at scale, but without counterfactual analysis we do not know what the net impact is at scale, which means we cannot conduct cost-effectiveness analysis. In allocating scarce resources across unlimited development needs, we need to be able to compare the cost-effectiveness of programmes as they will ultimately be implemented.
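The point about counterfactuals can be sketched in a few lines of Python. Performance monitoring gives us only the participants' outcomes; net impact needs a comparison group standing in for the counterfactual, and the cost-effectiveness ratio is computed on that net impact. The helper names and all numbers below are hypothetical, and a simple difference in means is assumed as the impact estimate (a real evaluation would need a credible identification strategy behind the comparison group).

```python
from statistics import mean


def net_impact(treated_outcomes, comparison_outcomes):
    """Hypothetical net impact: difference in mean outcomes between
    participants and a comparison group representing the counterfactual."""
    return mean(treated_outcomes) - mean(comparison_outcomes)


def cost_effectiveness(total_cost, n_treated, impact_per_person):
    """Cost per unit of net impact; undefined without a positive net impact."""
    if impact_per_person <= 0:
        raise ValueError("no positive net impact to attribute costs to")
    return total_cost / (n_treated * impact_per_person)


if __name__ == "__main__":
    treated = [12, 14, 13, 15]     # performance data: what monitoring observes
    comparison = [11, 12, 11, 12]  # counterfactual: what impact evaluation adds
    impact = net_impact(treated, comparison)
    print("mean outcome among participants:", mean(treated))
    print("net impact per participant:", impact)
    print("cost per unit of net impact:", cost_effectiveness(1_000_000, 10_000, impact))
```

Using the participants' mean outcome alone in place of `impact` would badly overstate what the programme achieves per unit of cost, which is why performance data at scale cannot substitute for an impact evaluation at scale.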

What can we do?

My first recommendation is that we pay more attention to the behavioural theories and assumptions and to the economic theories and assumptions that we explicitly or implicitly make when designing our pilot interventions. If our intervention is a cool new nudge, what is the novelty effect likely to be? How does our small programme operate in the context of the larger market? And so on.

My second recommendation is that we conduct more impact evaluations at scale. I am not arguing that we need to test everything at scale. Working at 3ie, I certainly understand how expensive impact evaluations of large programmes can be. But when careful consideration reveals high threats to ecological validity, a new intervention should not be labelled as successful until we can measure a net impact at scale. Contrary to the arguments of those who oppose impact evaluation at scale, scale does not need to mean that the programme covers every person in the entire country. It just needs to mean that the programme being tested closely approximates, in terms of agents and markets and size, a programme covering the whole country. Put differently, an impact evaluation of a programme at scale should be defined as a programme impact evaluation with minimal threats to ecological validity.

My third recommendation is that we pay more attention to the difference between pilot studies that test mechanisms and pilot studies that test programmes. Instead of expecting to go from pilot to scale, we should expect more often to go from pilot to better theory. Better theory can then inform the design of the full programme, and impact evaluation of the programme at scale can provide the evidence policymakers need for resource allocation.

(This blog post is adapted from a presentation I gave at the American Evaluation Association 2015 conference.)